Traffic Profiling and Performance Instrumentation For On-Chip Interconnects

On-chip bus interconnect fabrics have become critical sub-systems in SoC platforms. Not only do they need to be functionally correct, but they also need to deliver the performance demanded by user applications. This paper describes a proposal for the specification of bus master traffic profiles and system level traffic scenarios, together with the definition of performance metrics that need to be instrumented to ensure that an interconnect is meeting its performance targets.


Introduction

Nearly all Systems on a Chip (SoCs) are implemented using an internal architecture in which at least one CPU core is connected to a number of hardware resources via an internal interconnect based on a standard on-chip bus protocol such as AMBA. Most design IP is built with one or more on-chip bus interface sockets so that it can easily be integrated into a SoC by connecting it to one or more counterpart sockets on the SoC's interconnect fabric. The success of this approach has meant that designs that started off as relatively simple specialized microprocessors, with a CPU core and a few peripherals, have now evolved into complex, flexible computing platforms.

At the same time, the software that runs on the SoC has evolved from closed firmware supporting limited device functionality to multiple operating systems capable of running third-party applications. Because the SoC has effectively become an open computing platform, it is increasingly important to validate that the platform will be able to support multiple use models.

Each of these use models will exercise a different combination of the on-chip hardware resources, and each will place performance demands on the interconnect at the core of the SoC. If the interconnect does not deliver the required bandwidth in these different situations, the user experience will suffer, which may ultimately cause the end product to fail in the marketplace.

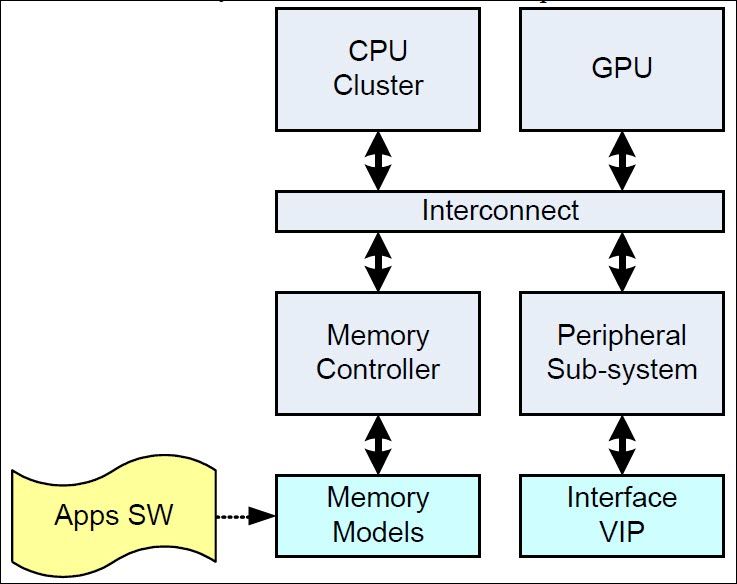

Figure 1: Representation of a SoC Verification Environment

A. Validation Process

Interconnect performance is first considered at the architectural level, where spreadsheets are commonly used to arrive at a first-order approximation of latency and bandwidth requirements. ESL tools, modeling the interconnect in SystemC, are also used to analyze system architectures and performance requirements. However, creating a model of a sophisticated interconnect with enough timing accuracy to get realistic results is a complex task, and it is usually easier to work with the actual interconnect RTL.
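As an illustration of the kind of first-order, spreadsheet-style estimate described above, the following minimal Python sketch sums the bandwidth demand of a few bus masters and compares it with the ideal peak bandwidth of a single shared link. Everything in it is an assumption made for illustration: the `MasterBudget` class, the `fabric_utilization` function, the master names, and all of the numbers are hypothetical and are not taken from the paper. It also assumes an ideal link that moves one full-width data beat per clock cycle, which real interconnects will not sustain.

```python
from dataclasses import dataclass


@dataclass
class MasterBudget:
    """Hypothetical first-order traffic budget for one bus master."""
    name: str
    bytes_per_transfer: int       # payload size of a typical transfer
    transfers_per_second: float   # average transfer rate

    @property
    def bandwidth_bytes_per_s(self) -> float:
        # Average demanded bandwidth = payload size x transfer rate.
        return self.bytes_per_transfer * self.transfers_per_second


def fabric_utilization(masters, bus_width_bytes, clock_hz):
    """Return total demand divided by the ideal peak bandwidth of one link.

    Assumes (for illustration only) that the link can move one
    full-width data beat on every clock cycle.
    """
    peak = bus_width_bytes * clock_hz
    demand = sum(m.bandwidth_bytes_per_s for m in masters)
    return demand / peak


# Illustrative numbers only; not taken from any real design.
masters = [
    MasterBudget("cpu", bytes_per_transfer=64, transfers_per_second=2e6),
    MasterBudget("display", bytes_per_transfer=64, transfers_per_second=4e6),
    MasterBudget("dma", bytes_per_transfer=32, transfers_per_second=1e6),
]

utilization = fabric_utilization(masters, bus_width_bytes=8, clock_hz=200e6)
print(f"Estimated link utilization: {utilization:.0%}")
```

An estimate of this kind ignores arbitration, burst behavior, and contention between masters, which is one reason the timing-accurate RTL is often preferred for realistic results.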

Typically, the interconnect fabric is generated according to the specification and performance budgets estimated by the system architect. It is then integrated with the rest of the design IP that makes up the SoC. The functionality of the fabric can be verified stand-alone or as part of the wider device. The performance of the interconnect is usually checked once the full SoC, or sub-system, is in place, and is verified by running some use case scenarios using the RTL and at least part of the application code.

This is represented by the diagram in Figure 1 (above), where the application software is loaded into memory and then executed on the CPU cluster, causing a series of transfers to take place across the interconnect. Setting up one of these use case scenarios relies on having all or most of the SoC in place and having access to software that is mature enough to support the test case. Running the scenario is therefore something that happens fairly late in the front-end implementation cycle, arguably too late to be effective. Running the scenario with the SoC RTL and the application software is also very resource intensive and may take considerable time to execute; exploring variations around a particular scenario will take even longer.

Experience shows that doing an early stand-alone functional check of the interconnect structure can quickly isolate specification and implementation problems which would be difficult to find within the context of an integrated SoC design.


Verification IP Feb 26, 2013 pdf
